Don’t ring the ‘dorbell’; no one’s home.

I was looking through my logwatch log one day, and I came across some of the strangest-looking hits I’ve ever seen.

/Ringing.at.your.dorbell!: 1 Time(s)

Looking at the original other_vhosts_access.log file, I saw:

www.johndstech.com:80 125.25.26.121 - - [12/Jul/2015:10:30:31 -0600] "GET /Ringing.at.your.dorbell! HTTP/1.0" 404 31386 "http://google.com/search?q=2+guys+1+horse" "x00_-gawa.sa.pilipinas.2015"

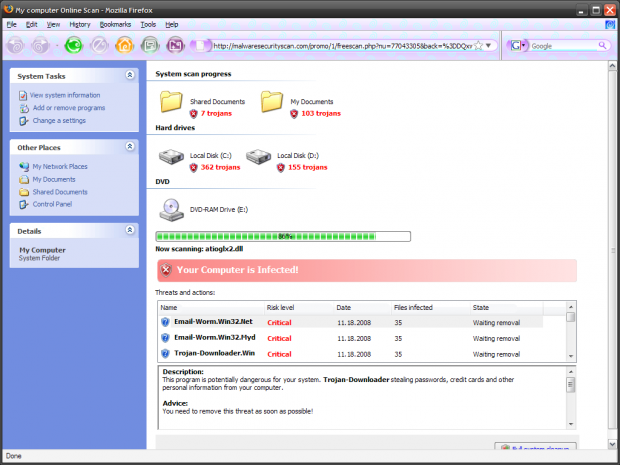

My first thought was that it was some sort of strange joke, but it occurred again the next day, so it was obviously worth looking into. As it turns out, this is an attempt at exploiting the Shellshock vulnerability. Script kiddies aren’t too bright, so they just copy and paste old vulnerabilities and try over and over again. So, how best to block stupid URLs like this?

I could have blocked the traffic by referrer, but when I weighed the pros and cons, the cons won. After all, the referrer isn’t necessarily what I want to block, and the skepticism.us link already points out two of them. No, I want to block stupid URLs, not the referrer.

Well, a little research came up with ModSecurity, aka mod_security. It seemed like the ideal choice, and it uses Apache syntax and config files. So, I proceeded to implement it and hit a wall — hard. I banged my head here, and I banged my head there, but all I got was a headache.

That’s because I was wasting my time, at least a couple of hours of it. ModSecurity turns out not to be very WordPress-friendly. That actually makes little sense, since the examples almost always consist of using a PHP test script to block a MySQL injection! The workaround is to exclude the WordPress directories! That makes absolutely no sense when WordPress is the main platform!

So, I was without a means to block stupid URLs. Fail2ban blocks IP addresses, but only after failed access attempts, particularly bad logins. However, it turns out that there still are a couple of rather reactive alternatives.

Method 1: Iptables

The best option seems to be iptables. Granted, that is a little intimidating, but fortunately there are plenty of examples on the web.

The best one I’ve seen so far is “Linux : using iptables string-matching filter to block vulnerability scanners” at SpamCle@ner. It is easy to follow, although I’ll admit I only followed the first part of it. The downsides they point out are that it will always filter port 80, which seems minor since I use ufw and other stuff, and that “it can cause errors (‘false positive’)”, which seems very unlikely for the type of junk we are talking about here.
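To give an idea of what such a string-matching rule looks like, here is a minimal sketch of my own, not copied from the SpamCle@ner article. It assumes your iptables has the `string` match module available and that dropping the packet is what you want. `build_drop_rule` is a hypothetical helper name, and it only prints the commands so you can review them before running anything as root.

```shell
#!/bin/bash
# Dry-run sketch: print (do not run) one iptables rule per junk URL.
# Assumptions: traffic arrives on TCP port 80 and the "string" match
# module is available; build_drop_rule is a made-up helper name.

build_drop_rule() {
    # -m string searches the packet payload for the literal text;
    # --algo is mandatory (bm = Boyer-Moore, kmp is the alternative).
    printf "iptables -I INPUT -p tcp --dport 80 -m string --string '%s' --algo bm -j DROP\n" "$1"
}

# Two of the junk URLs mentioned in this post.
for PATTERN in '/Ringing.at.your.dorbell!' '/Diagnostics.asp'
do
    build_drop_rule "$PATTERN"
done
```

Once the printed rules look right, they can be pasted into a root shell. Keep the patterns long and specific, since the match is done on raw packet contents and a short string is where false positives would come from.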

On the upside, this blocks the traffic before it even hits the Apache server. On the downside, you have to find a way to save it, else the rules disappear upon a server reboot.

There is a workaround for this, and it involves installing iptables-persistent. Read up on this and how to save the iptables at “Saving Iptables Firewall Rules Permanently”.
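For the impatient, saving the rules boils down to dumping them into the file that iptables-persistent reloads at boot. The following is only a sketch under a few assumptions: a Debian or Ubuntu system, the package installed with `apt-get install iptables-persistent`, and IPv4 rules only. `save_rules`, `RULES_FILE` and `SAVE_CMD` are hypothetical names I made up for illustration.

```shell
#!/bin/bash
# Sketch: write the running IPv4 rules where iptables-persistent expects them.
# RULES_FILE and SAVE_CMD are illustrative variables, overridable for testing.

RULES_FILE="${RULES_FILE:-/etc/iptables/rules.v4}"   # file restored at boot
SAVE_CMD="${SAVE_CMD:-iptables-save}"                # prints the current rule set

save_rules() {
    # Bail out early if the dump command is not installed.
    if ! command -v "$SAVE_CMD" >/dev/null 2>&1; then
        echo "$SAVE_CMD not found; is iptables installed?" >&2
        return 1
    fi
    # Redirect the dump into the rules file (needs root for the real path).
    "$SAVE_CMD" > "$RULES_FILE"
}

# Usage, as root, after adding or changing rules:
#   save_rules
```

On newer Debian and Ubuntu releases, `netfilter-persistent save` does the same job.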

Method 2: Ban repetitive stuff from logs

If you want a lower-quality, after-the-fact sort of ban, you could also implement a script that does some of the repetitive blocking for you. For example:

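#!/bin/bash
# Ban IPs that request known junk URLs or try known junk user names.

# ufw needs root, so check instead of only printing a warning.
if [ "$(id -u)" -ne 0 ]; then
    echo "Must run as root!" >&2
    exit 1
fi

LOGFILE="/some/path/to/idiot.log"

# Ban every IP whose request contains the literal string in $1.
# In other_vhosts_access.log the vhost comes first, so field 2 is the IP.
banhttp()
{
    for II in $(grep -F -- "$1" /var/log/apache2/*.log | cut -d' ' -f2 | sort -u)
    do
        ufw insert 5 deny from "$II" >> "$LOGFILE"
        echo "$II" >> "$LOGFILE"
    done
}

# Ban every IP that tried to log in with the user name in $1.
# Field 11 is assumed to hold the IP in auth.log; adjust for your log format.
banwp()
{
    for II in $(grep -F -- "$1" /var/log/auth.log | cut -d' ' -f11 | sort -u)
    do
        ufw insert 5 deny from "$II" >> "$LOGFILE"
        echo "$II" >> "$LOGFILE"
    done
}

# Junk requests seen in the Apache logs.
for JJ in 'POST /xmlrpc.php' '/Diagnostics.asp' '/Ringing.at.your.dorbell!' \
    '/wiki/Five_Weird_Tricks_for_Stair' '/wiki/What_You_Need_to_Understand_About_Cardio_Training'
do
    banhttp "$JJ"
done

# Junk user names seen in auth.log.
for JJ in 'PaigeBiehl3540' 'Sabina27Y002' 'FrancineSmith23'
do
    banwp "$JJ"
done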
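Before letting a script like this loose on ufw, it may be worth running the grep, cut and sort pipeline from banhttp on its own to see which addresses it would ban. The sketch below does only that. `list_offenders` is a hypothetical name, it assumes the vhost-first log format shown earlier (IP in field 2), and the extra IPv4 filter is my own addition so that lines in other formats do not produce garbage.

```shell
#!/bin/bash
# Dry run: list the IPs that requested a junk URL, without banning anyone.
# Usage: list_offenders PATTERN LOGFILE...
# list_offenders is an illustrative helper, not part of the script above.

list_offenders() {
    lo_pattern="$1"
    shift
    # -h drops the file-name prefix, -F treats the pattern as literal text.
    # The second grep keeps only fields that look like an IPv4 address.
    grep -hF -- "$lo_pattern" "$@" \
        | cut -d' ' -f2 \
        | grep -E '^[0-9]{1,3}(\.[0-9]{1,3}){3}$' \
        | sort -u
}

# Example:
#   list_offenders '/Ringing.at.your.dorbell!' /var/log/apache2/other_vhosts_access.log
```

If the list contains an address you recognise as your own or a legitimate crawler, that is the moment to tighten the pattern rather than after the ban.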

In theory, this latter method might be easier to maintain for newbies, but over time I suspect that this could become unwieldy. OTOH, it requires no direct fiddling with iptables, saving them, etc.

In reality, the best approach might be a hybrid one. Some of the more obvious patterns could go into iptables directly, especially when they are so common that you are blocking them every day and/or they are obvious hacking attempts. The less serious stuff could just go into the script, which will block IPs that keep banging on links that don’t exist, never have existed, and are just plain tiring to look at.